Understanding OpenAI API Cost In-Depth Using a Real Example

Author’s update 5/6/23

This article uses GPT-3 costs, but the use case, formulas, and cost structure still apply to the GPT-3.5 Turbo chat model and to GPT-4 prompt and completion pricing. The great thing about OpenAI pricing is that all of the models use token-based pricing, so this article’s usefulness should stand the test of time.

For example, just yesterday I had an executive ask me to quote them on the cost of having GPT-3.5 Turbo prompts created that will write a 750-word article and translate it into Spanish. I literally pulled up this article and used the formula, simply plugging in the new GPT-3.5 Turbo price of $0.002 per 1,000 tokens. My response in the email went like this:

Hello executive,

Let’s assume 25% prompt bloat and $125 / hour at 40 hours for initial prompt design. The one-time prompt design cost will be $5,000.00. Writing 750 words and translating 750 words will each cost the same, assuming they both fall within the 25% prompt bloat on words. With that said, here is the cost for each article written or translated.

Cost per article written OR article translated = 750 * 1.25 = 937.5 / .75 = 1,250 * .000002 = $0.0025

- 750 = Words

- 1.25 = Add 25% to words to account for the prompt(s)

- 937.5 = Total words to be processed

- .75 = 1,000 tokens is roughly 750 words, so we divide our word count by .75 to convert to tokens

- 1,250 = Total tokens to process and pay for

- .000002 = Cost per token by using $0.002 / 1,000 tokens from OpenAI’s website
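
The same formula can be sketched as a small Python function. This is only an illustration of the arithmetic above: the function name and parameter names are our own, the 25% prompt bloat is the assumption from the email, and .75 words per token is OpenAI’s rule of thumb rather than an exact count.

```python
WORDS_PER_TOKEN = 0.75  # rule of thumb: 1,000 tokens is roughly 750 words


def cost_per_article(words, prompt_bloat=0.25, price_per_1k_tokens=0.002):
    """Estimate the API cost of writing OR translating one article.

    prompt_bloat is the assumed extra share of words taken up by the prompt(s).
    """
    total_words = words * (1 + prompt_bloat)      # 750 * 1.25 = 937.5
    total_tokens = total_words / WORDS_PER_TOKEN  # 937.5 / .75 = 1,250
    return total_tokens / 1000 * price_per_1k_tokens


print(f"${cost_per_article(750):.4f}")  # $0.0025
```

Swapping in a different price per 1,000 tokens or a different bloat percentage is all it takes to requote for another model.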

Understanding OpenAI Pricing / Your Cost

Are you considering using OpenAI’s GPT3 AI prompts to rewrite text, design code, or simply answer people’s questions like the newly released ChatGPT chatbot? If you are, some questions likely come to mind. How do you sign up for an account? How do you design and test prompts? How much does it cost, and how does the cost structure work?

For the first few questions, such as where and how to register an OpenAI account and how to get started with designing GPT3 prompts, wordbot Cofounder Kevin Sims has an insightful post he wrote last year titled Learning The OpenAI Playground Step-By-Step. For the cost question, we’ve got you covered with this post.

In this article, we’re going to focus on the cost of OpenAI’s GPT3 base language models. We’ll break down how GPT3 cost works using formulas and real examples from our wordbot.io app. We will not cover GPT3 cost for image models or fine-tuned language models.

GPT3 Cost / Pricing Overview

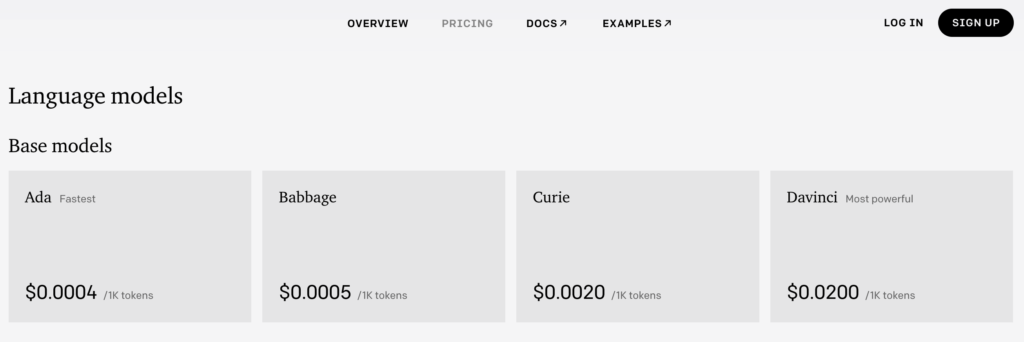

To view OpenAI’s pricing (which is your GPT3 cost) navigate to openai.com, scroll down, and click on the Pricing link located under the API section. If you want to cheat, simply visit the pricing page directly by navigating to https://openai.com/api/pricing. At the time of this writing, you’ll see the below pricing for their base language models.

As mentioned above, in this post we’re going to be covering only the GPT3 cost for the base language models, so before we get into cost details, let’s review what exactly that means to ensure you understand what you’re reading in the remainder of this article.

The Difference Between GPT3 Models

When visiting the pricing page on OpenAI’s website, you’ll see three broad types of models – base language models, fine-tuned language models, and image models. All these models are controlled with prompts.

Base Language Model, Covered In This Post

There are four base language models: Ada, Babbage, Curie, and Davinci. Ada is the fastest and cheapest model, while Davinci is the most powerful and most expensive. We at wordbot integrated the Curie and Davinci models into our product, which for rewriting and generating text produce excellent quality at affordable price points.

Fine-Tuned Language Model, Not Covered

Fine-tuned language models are models you create yourself by fine-tuning the base models. For example, you can train the Curie model by regularly feeding it data that represents the input and expected output. This allows the model to better understand what you expect from it. Because you must train these models, when doing fine-tuning you will incur both training and usage costs. You can learn more about the fine-tuning pricing model here.

Image Model, Not Covered

You can use OpenAI’s DALL-E model for creating images and art. The cost is by image generated and resolution (image size), with three different resolutions and prices available.

With the different model definitions explained, let’s begin our in-depth look at how the base GPT3 language models structure their cost and how to perform some useful conversions for understanding it better. For the rest of this article, you can assume we’re referring to the base language models.

GPT3 Cost Structure

GPT3 cost is billed in tokens, or more specifically per 1,000 tokens. Confusing, right? For example, the Davinci model costs $0.02 per 1,000 tokens. What is a token, you ask? Great question. As defined by OpenAI, a token is approximately:

- 4 characters

- .75 words

Well, that just made things even more confusing so let’s look at a quick example. Let’s calculate our total cost for 10,000 tokens and then convert those tokens into characters and words.

Total Cost = (10,000 tokens / 1,000 tokens) * $0.02 = $.20 or 20 cents

We now know for 10,000 tokens, our total GPT3 cost is 20 cents, but how many characters and words is that? Let’s do the math and see what we get for our 20 cents.

Characters = 10,000 tokens * 4 characters = 40,000 characters

Words = 10,000 tokens * .75 words = 7,500 words

Just a note – sometimes for word calculations I prefer to use the standard 4.7 character average in the English language instead of GPT3’s .75 words per token. If I do this, I get:

Words = 40,000 characters / 4.7 characters per English word = 8,511 words

As you can see, there is a sizable difference in word counts depending on whether you use the average characters per word for your language or OpenAI’s .75 rule of thumb. Take this into consideration if you’re using your GPT3 cost to price a software product you’ve built. With the initial cost calculations under our belt, let’s learn where and how to monitor our usage and cost.
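
For readers who prefer code to arithmetic, here is a minimal sketch of the conversions above. The function and constant names are our own, the default price is the Davinci rate quoted in this article, and both word counts are estimates, not exact tokenizer output.

```python
CHARS_PER_TOKEN = 4               # OpenAI's rule of thumb
WORDS_PER_TOKEN = 0.75            # OpenAI's rule of thumb
AVG_CHARS_PER_ENGLISH_WORD = 4.7  # average used in this article


def describe_tokens(tokens, price_per_1k_tokens=0.02):
    """Convert a token count into cost, characters, and two word estimates."""
    characters = tokens * CHARS_PER_TOKEN
    return {
        "cost": tokens / 1000 * price_per_1k_tokens,
        "characters": characters,
        "words_by_token_rule": tokens * WORDS_PER_TOKEN,
        "words_by_char_average": round(characters / AVG_CHARS_PER_ENGLISH_WORD),
    }


result = describe_tokens(10_000)
print(f"Cost: ${result['cost']:.2f}")                      # Cost: $0.20
print(f"Characters: {result['characters']:,}")             # Characters: 40,000
print(f"Words (.75 rule): {result['words_by_token_rule']:,.0f}")
print(f"Words (4.7 chars): {result['words_by_char_average']:,}")
```

Keeping both word estimates side by side makes it easy to choose the more conservative one when pricing a product.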

Viewing Your GPT3 Cost & Usage

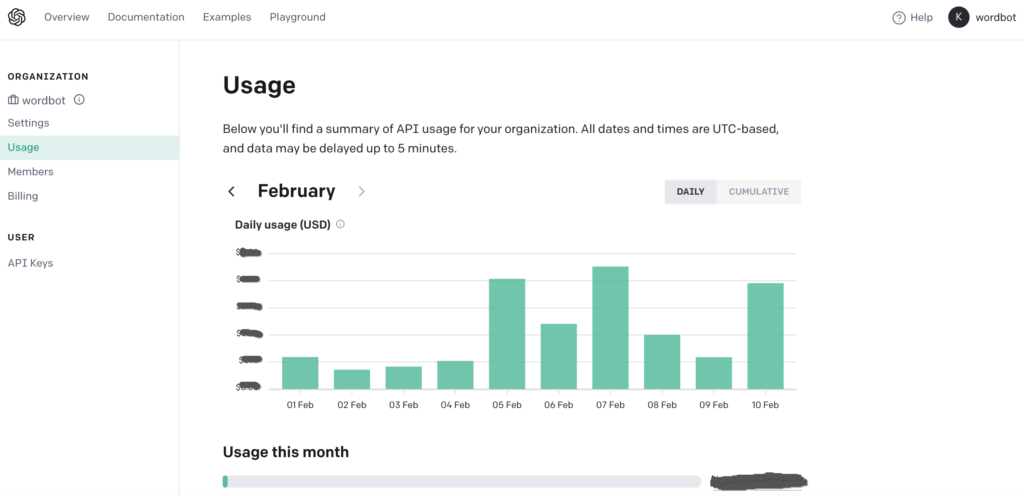

I monitor our cost weekly to ensure no users are abusing wordbot. I do this by viewing the Usage tab in our OpenAI account, which shows our GPT3 cost by day per month. I can also see cumulative growth over the course of the month. The visualizations are graphs, with your cost on the Y axis and the days on the X axis. Below are the steps to find your usage.

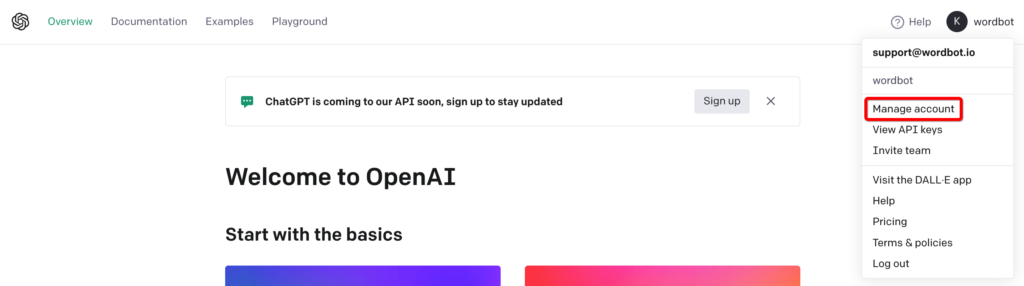

Login to OpenAI and Manage Your Account

- Visit openai.com

- Scroll to the bottom and click Login under the API section

- Click your profile icon in the top right and select Manage Account

The Usage Tab

After clicking Manage Account, you should land on the Usage tab. If not, simply click the Usage link on the left under Organization.

This screen shows your usage by day by month, with vertical bars representing your GPT3 cost. The graph defaults to by day for the current month, but you can switch it to show cumulative to see your cost growth over the course of the month. To do this, click the toggle in the top right as shown below.

Understanding Your Monthly Usage Limit

OpenAI limits your usage, although the limit can be easily raised. In the screenshot above, you can see we’ve used little of our February limit. I darkened out our actual usage, but it reads like Usage $ / Usage Limit $. So for example, if you have a $5,000 monthly limit and have used $3,450 worth of services the barometer would read like this:

$3,450.00 / $5,000.00

How To View Daily Usage Details

At the bottom of the Usage tab, you can see your exact usage by day. You can drill into which GPT3 model you used, how many requests were made to it, and how many tokens were used, both from your prompt text and the completion text. Below is an example from someone using wordbot.io.

How to Monitor and Change Your Usage Limit

If your usage is growing rapidly, you can reach out to OpenAI and have it raised. We’ve done this a few times and never had an issue. They responded quickly and promptly increased our monthly limit.

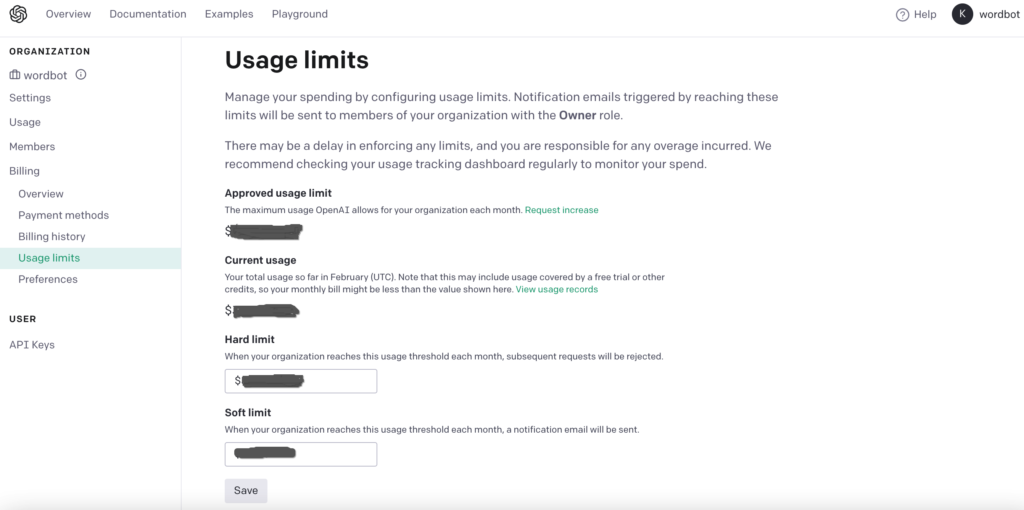

Another fantastic tool for managing your GPT3 cost usage limits is the Usage Limits tab located under Manage Account > Billing > Usage Limits. In this tab, you can:

- See your approved OpenAI GPT3 Limit in Dollars

- Request to have that limit raised by clicking a link

- See your current GPT3 usage in Dollars

- Click a link to view your usage details, which is the Usage tab we reviewed above

- Set your Hard Limit, which is the limit at which your GPT3 requests stop being processed. This is great for protecting yourself against rogue customers, prompts, etc.

- Set a Soft Limit, which emails you when hit. Think of this as a warning.

See below an example of this useful tab from our wordbot.io OpenAI account.

Understanding Which Text Counts Toward Your GPT3 Cost

You control GPT3 with prompts, which are textual commands. We have many examples of these commands littered across our blog. Each command is sent to the GPT3 model, which returns a completion, or the result text.

It’s important to understand when using GPT3 that your prompt (or instructional text) does count against your usage, so when factoring in your cost it is imperative that you don’t forget about the textual instructions you include in the prompt. Prompts can get long and drastically increase your cost, especially when integrating GPT3 into a software platform, so be sure to factor this into your cost and pricing decisions.
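
To make the point concrete, here is a rough sketch that estimates the billed tokens for a request from the prompt and the expected completion together. It assumes the 4 characters per token rule of thumb, so treat the result as a ballpark figure; an exact count requires a real tokenizer. The function names and the sample prompt are hypothetical.

```python
import math

CHARS_PER_TOKEN = 4  # rough rule of thumb, not an exact tokenizer


def estimate_tokens(text):
    """Approximate the token count of a piece of text from its length."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def estimate_request_cost(prompt, expected_completion, price_per_1k_tokens=0.02):
    """Estimate the cost of one request: prompt AND completion are billed."""
    billed_tokens = estimate_tokens(prompt) + estimate_tokens(expected_completion)
    return billed_tokens / 1000 * price_per_1k_tokens


# Hypothetical prompt and completion, for illustration only.
prompt = "Convert the following sentence from passive voice to active voice:\n\nThe ball was thrown by the boy."
completion = "The boy threw the ball."
print(f"Prompt tokens (est.): {estimate_tokens(prompt)}")
print(f"Request cost (est.): ${estimate_request_cost(prompt, completion):.5f}")
```

Notice that in a short request like this one, the instructions can easily account for most of the billed tokens.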

A Detailed GPT3 Cost Example

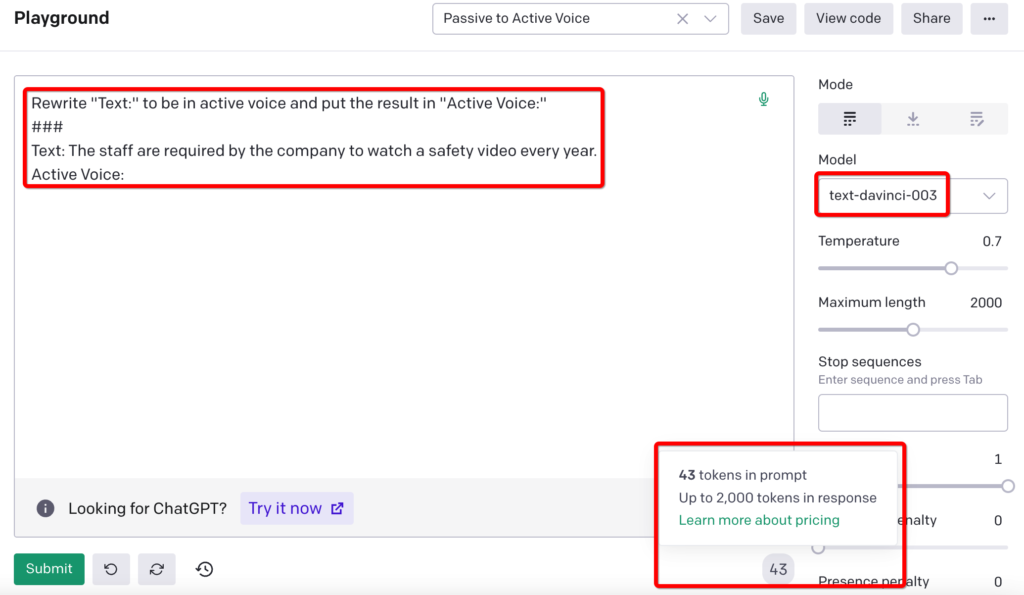

Let’s now look at a detailed example using a prompt we designed for wordbot. This prompt will accept text from a user and convert it from passive voice to active voice. This is a common use across AI content generation platforms because it is well known that active voice content engages readers much more than passive voice, yet many people write in passive voice. Below is the prompt we designed in the OpenAI Playground and integrated into our software stack.

Notice a few very important things. First, we’re using the Davinci model, which costs $0.02 per 1,000 tokens. We’ll use this later in the example to determine our cost from running this prompt. Second, notice our prompt has 43 tokens (circled at the bottom of the above screenshot). Let’s click the Submit button, run this prompt, and view the results in the below screenshot.

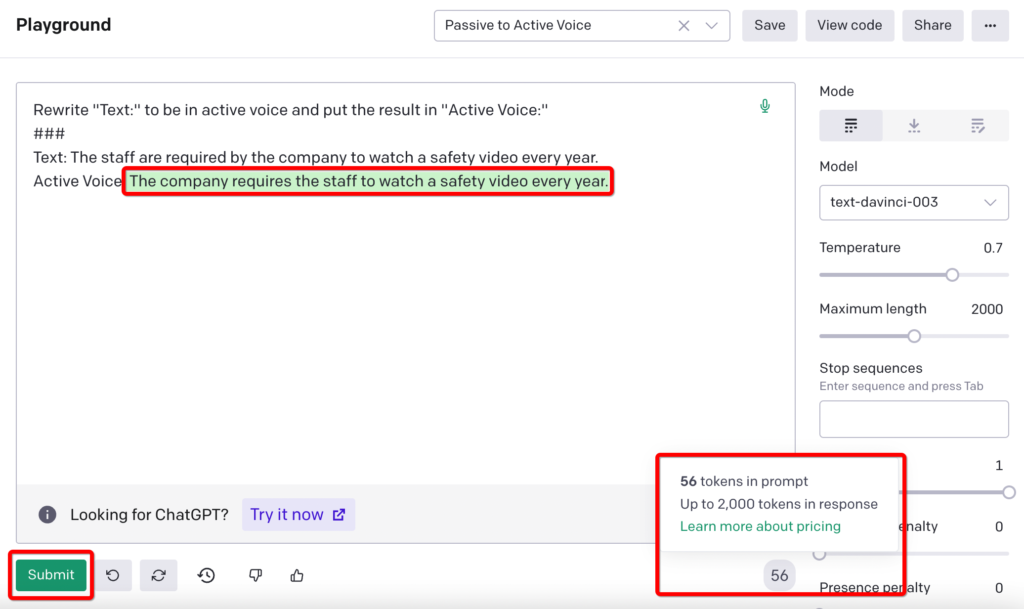

Notice first that the prompt worked and converted our text from passive to active voice, yeah! If you need a passive to active voice GPT3 prompt, feel free to use this one, but I digress. Notice our tokens used now says 56 instead of 43. Let’s calculate our GPT3 cost in detail from running the above prompt.

Data For Calculating GPT3 Cost

- 1,000 tokens = $0.02

- 1 Token = 4 characters

- 1 Token = .75 words

- Prompt tokens: 43

- Completion Tokens: 56 – 43 = 13

GPT3 Cost For This Prompt and Completion

Cost Per Token = $0.02 / 1,000 tokens = $0.00002

Prompt Cost = 43 tokens * $0.00002 = $0.00086

Completion Cost = 13 tokens * $0.00002 = $0.00026

Total Cost = $0.00086 + $0.00026 = $0.00112

Cost Per Character = $0.00112 / (56 tokens * 4 characters per token) = $0.00112 / 224 characters = $0.000005 cost per character

Cost Per Word = $0.00112 / (56 tokens * .75 words) = $0.00112 / 42 words = $0.00002667 cost per word

We can see this passive to active prompt and completion cost us just over a 10th of a penny. So a wordbot user could do 10 of these conversions and, assuming each sentence is about the same size as this example, it would cost us about a penny.
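
Here is the same breakdown as a short Python sketch, using the 43 prompt tokens and 56 total tokens from the screenshots. The function name and dictionary keys are our own choices, and the per-character and per-word figures rely on OpenAI’s rules of thumb.

```python
PRICE_PER_1K_TOKENS = 0.02  # Davinci
CHARS_PER_TOKEN = 4
WORDS_PER_TOKEN = 0.75


def cost_breakdown(prompt_tokens, total_tokens):
    """Split the cost of one request into prompt and completion parts."""
    cost_per_token = PRICE_PER_1K_TOKENS / 1000
    completion_tokens = total_tokens - prompt_tokens
    total_cost = total_tokens * cost_per_token
    return {
        "completion_tokens": completion_tokens,
        "prompt_cost": prompt_tokens * cost_per_token,
        "completion_cost": completion_tokens * cost_per_token,
        "total_cost": total_cost,
        "cost_per_character": total_cost / (total_tokens * CHARS_PER_TOKEN),
        "cost_per_word": total_cost / (total_tokens * WORDS_PER_TOKEN),
    }


breakdown = cost_breakdown(prompt_tokens=43, total_tokens=56)
print(f"Completion tokens: {breakdown['completion_tokens']}")  # 13
print(f"Total cost: ${breakdown['total_cost']:.5f}")           # $0.00112
print(f"Cost per word: ${breakdown['cost_per_word']:.8f}")     # $0.00002667
```

One thing the code makes obvious: cost per character and cost per word depend only on the model price and the conversion ratios, not on the size of the request.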

GPT3 Cost For Generating a 2,950-Word Article With a 50-Word Prompt

The above cost seems insignificant, but it isn’t. When you account for many thousands of users running thousands of prompts, the cost can be significant. To demonstrate this in a small way, let’s do a quick GPT3 cost calculation on a user generating a 2,950-word article. Our prompt will be 50 words. We’ll use the Davinci model again, which is $0.02 per 1,000 tokens.

Words = 3,000 (50 from the prompt + 2,950 from the completion)

Tokens = 4,000 (3,000 words / .75 words per token)

Cost = $0.08 or 8 cents (4,000 tokens / 1,000 tokens * $0.02 cost per 1,000 tokens)

Below is the entire calculation written out as one.

Total GPT3 Cost = Prompt words of 50 + 2,950 words of completion = 3,000 total words / .75 words per token = 4,000 tokens / 1,000 tokens = 4 * $0.02 cost per 1,000 tokens = $0.08

With what we’ve learned in this article, we were able to quickly calculate that generating a 2,950 word article from a 50 word AI prompt cost us 8 cents.
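
To see how a cost this small adds up across many users, here is a minimal sketch that computes the per-article cost and then scales it. The user and article counts are made-up numbers for illustration only, and the function names are hypothetical.

```python
WORDS_PER_TOKEN = 0.75  # OpenAI's rule of thumb


def article_cost(prompt_words, completion_words, price_per_1k_tokens=0.02):
    """Estimate the cost of one generated article, prompt included."""
    total_words = prompt_words + completion_words
    tokens = total_words / WORDS_PER_TOKEN
    return tokens / 1000 * price_per_1k_tokens


def projected_cost(users, articles_per_user, cost_per_article):
    """Scale the per-article cost to a whole user base."""
    return users * articles_per_user * cost_per_article


one_article = article_cost(prompt_words=50, completion_words=2950)
print(f"One article: ${one_article:.2f}")  # One article: $0.08

# Hypothetical volume: 1,000 users each generating 10 articles in a month.
print(f"Projected: ${projected_cost(1000, 10, one_article):,.2f}")  # Projected: $800.00
```

Eight cents per article turns into hundreds of dollars per month at even modest volume, which is why the per-request math is worth doing.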

Why The Above GPT3 Cost Calculations Matter

Calculating cost per character and cost per word are musts if you’re designing software that you’ll sell to others. This is because customers / users don’t understand what tokens are, so you’ll want your pricing packages to be in the form of character or word limits, which as a consumer they easily understand.
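
As one possible way to turn this into package limits, the sketch below works backwards from an API budget to a word limit. Everything here is an assumption for illustration: the function name, the $10 budget, and the optional prompt bloat percentage. It also leans on the .75 words per token rule of thumb.

```python
WORDS_PER_TOKEN = 0.75  # OpenAI's rule of thumb


def word_limit_for_budget(budget_dollars, price_per_1k_tokens=0.02, prompt_bloat=0.0):
    """Estimate how many user-facing words a given API budget can cover.

    prompt_bloat reserves a share of the budget for prompt text,
    since prompts are billed along with completions.
    """
    tokens = budget_dollars / price_per_1k_tokens * 1000
    words = tokens * WORDS_PER_TOKEN / (1 + prompt_bloat)
    return round(words)


# Hypothetical $10 API budget on Davinci.
print(f"{word_limit_for_budget(10):,} words")                     # 375,000 words
print(f"{word_limit_for_budget(10, prompt_bloat=0.25):,} words")  # 300,000 words
```

The gap between the two numbers is the allowance for prompts, so a package sized on the first figure alone would cost more than expected.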

Conclusion

As everyone knows by now from the popularity of ChatGPT, OpenAI’s GPT3, and soon GPT4, AI is a game changer for business, software developers, and society as a whole. Not only are its capabilities amazing, but as you saw in this post it’s also very affordable. The GPT3 cost is low enough to encourage software developers and businesses across the globe to immediately begin adopting it into their tech stacks. But doing so comes with costs, and understanding those costs so you can properly price your products and make money for the value you provide is equally important. We hope this article helped.